#importing necessary modules

import os

import numpy as np

import matplotlib.pyplot as plt

from scipy import stats

random_state = 42 This tutorial is borrowed from Jake VanderPlas’s example of SVM in his notebook: Python Data Science Handbook

Motivation for Support Vector Machines

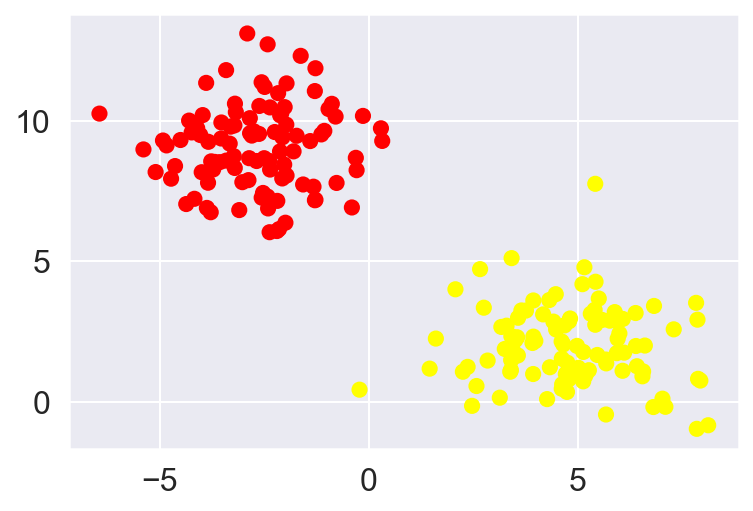

- We want to find a line/curve (in 2D) or a manifold (in n-D) that divides the class from each other. This is a type of Discriminative Classification

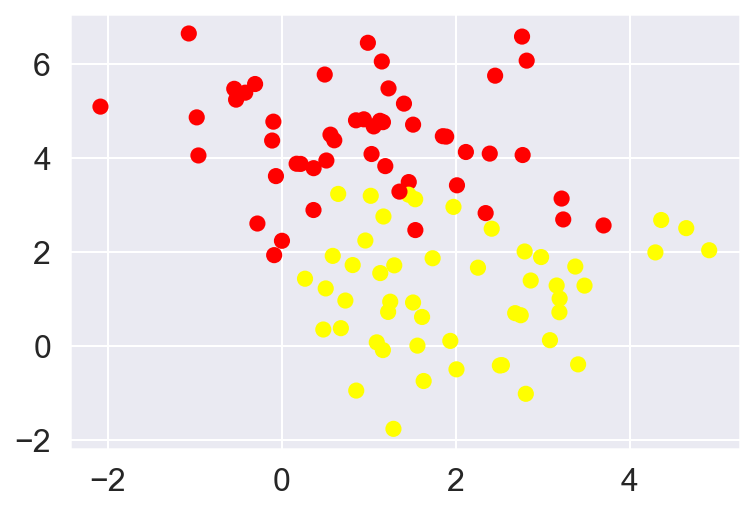

- Consider a simple case of classification task, in which the two classes of points are well separated

#----- PLOTTING PARAMS ----#

import matplotlib.pyplot as plt

from matplotlib.pyplot import cm

import seaborn as sns; sns.set()

%config InlineBackend.figure_format = 'retina'

%config InlineBackend.print_figure_kwargs={'facecolor' : "w"}

plot_params = {

'font.size' : 30,

'axes.titlesize' : 24,

'axes.labelsize' : 20,

'axes.labelweight' : 'bold',

'lines.linewidth' : 3,

'lines.markersize' : 10,

'xtick.labelsize' : 16,

'ytick.labelsize' : 16,

}

plt.rcParams.update(plot_params)from sklearn.datasets import make_blobs

X, y = make_blobs(n_samples=200, centers=2,

random_state=random_state,

cluster_std=1.5)#---Check what is X and y ----#

print('X is a {} array with x-y coordinates of the cluster points\n'.format(np.shape(X)))

print(X[:3])

print('\n')

print('y is a {} array with a classification of the points to which cluster they belong to\n'.format(np.shape(y)))

print(y[:3])

print('\n')

plt.scatter(X[:,0],X[:,1], c=y, s=50, cmap='autumn');X is a (200, 2) array with x-y coordinates of the cluster points

[[2.24823735 1.07410715]

[5.12395668 0.73232327]

[4.6766441 2.72016712]]

y is a (200,) array with a classification of the points to which cluster they belong to

[1 1 1]

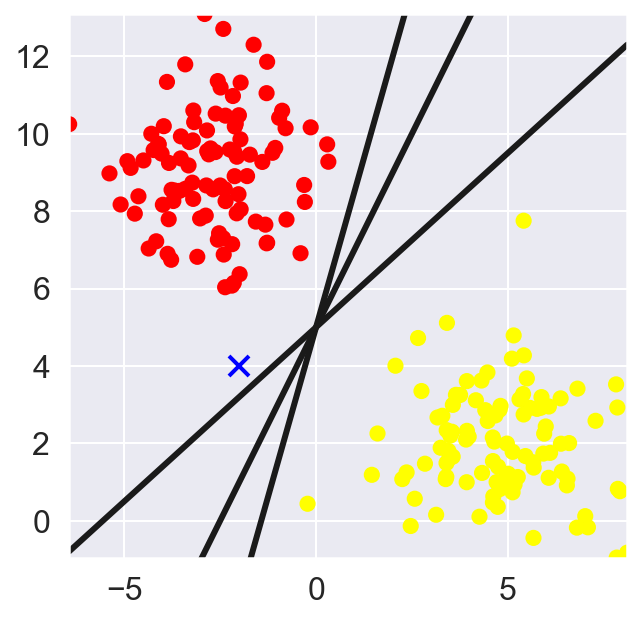

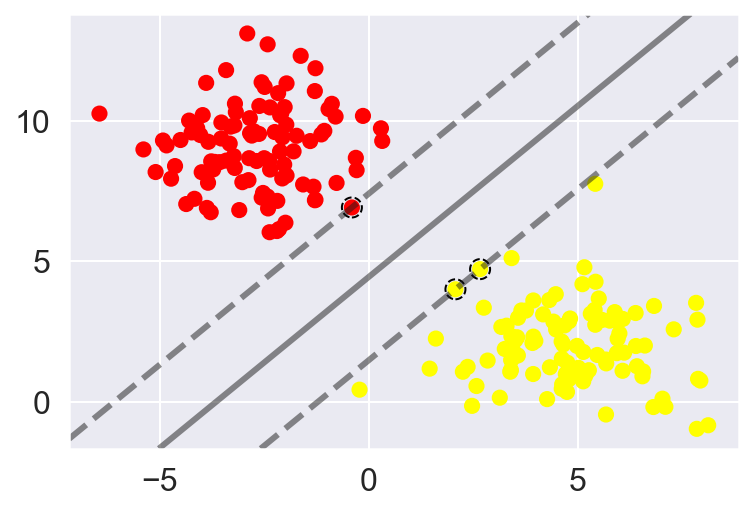

For two-dimensional data, as observed in this case, a linear discriminative classifier would attempt to draw a straight line separating the two data-sets and thereby creating a model for (binary) classification. For the 2D data like the shown above, this task could be done by hand. But there is more than one line that can divide this data in two halves!

x_fit = np.linspace(min(X[:,0]),max(X[:,0]))

fig, ax = plt.subplots(1,1, figsize=(5,5))

ax.scatter(X[:,0], X[:,1], c=y, s=50, cmap='autumn')

# Random point in the 2D plain

ax.plot([-2],[4],'x',color='blue', markeredgewidth=2, markersize=10)

# Plot various 2D planes separating the two 'blobs'

for m,b in [(3.5,5),(2,5),(0.9,5)]:

plt.plot(x_fit, x_fit*m+b, '-k')

plt.xlim(min(X[:,0]),max(X[:,0]))

plt.ylim(min(X[:,1]),max(X[:,1]))

What’s a better methodology to determine the cutting plane? Something like k-nearest neighbor clustering wherein you find the plane with best separation from the two clusters based on some distance metric. However, k-nearest neighbors is based on non-parametric method – needing to be estimated everytime a new data point is introduced.

What if I want something which is learned and then used as a function every other time a new datum is to be classified. This is where support vector machines (SVM) are useful.

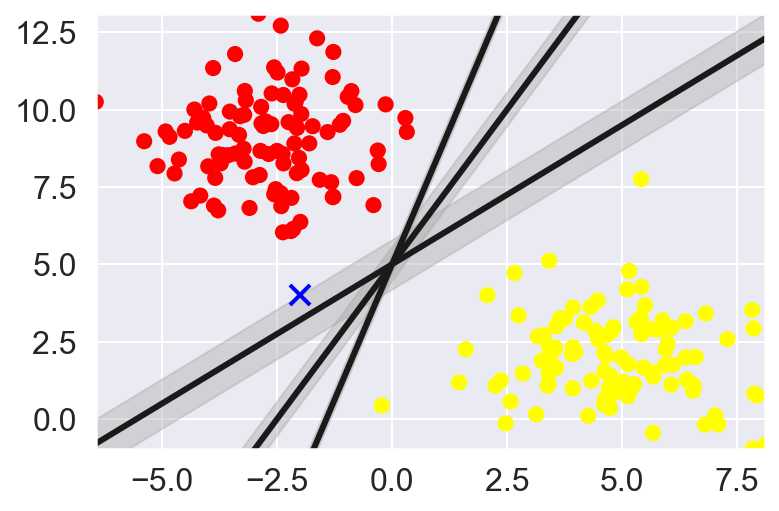

Support Vector Machines:

Rather than simply drawing a zero-width line between classes, we can draw round each line a margin of some width, up to the nearest point.

x_fit = np.linspace(min(X[:,0]),max(X[:,0]))

plt.scatter(X[:,0], X[:,1], c=y, s=50, cmap='autumn')

plt.plot([-2],[4],'x',color='blue', markeredgewidth=2, markersize=10)

for m, b, d in [(3.5,5,0.33),(2,5,0.55),(0.9,5,0.8)]:

y_fit = x_fit*m + b

plt.plot(x_fit, y_fit, '-k')

plt.fill_between(x_fit, y_fit-d, y_fit+d, edgecolor='none',

color='#AAAAAA', alpha=0.4)

plt.xlim(min(X[:,0]),max(X[:,0]))

plt.ylim(min(X[:,1]),max(X[:,1]))

In support vector machines, the line that maximizes this margin is the one we will choose as the optimal model. Support vector machines are an example of such a maximum margin estimator.

Fitting a support vector machine model

Using Scikit-learn’s SVM module to train a classifier on the above data. We will use a linear-kernel and set C parameters to a very large value.

from sklearn.svm import SVC

model = SVC(kernel='linear',C=1E10)

model.fit(X,y)SVC(C=10000000000.0, kernel='linear')To better appreciate the SVM classification logic, we use a convenience function to visualize the decision boundary as made by the SVM module. Code is adopted from Jake’s tutorial.

def plot_svc_decision_function(model, ax=None, plot_support=True, list_vectors=False):

"""Plot the decision function for a 2D SVC"""

if ax is None:

ax = plt.gca()

xlim = ax.get_xlim()

ylim = ax.get_ylim()

# create grid to evaluate model

x = np.linspace(xlim[0], xlim[1], 30)

y = np.linspace(ylim[0], ylim[1], 30)

Y, X = np.meshgrid(y, x)

xy = np.vstack([X.ravel(), Y.ravel()]).T

P = model.decision_function(xy).reshape(X.shape)

# plot decision boundary and margins

ax.contour(X, Y, P, colors='k',

levels=[-1, 0, 1], alpha=0.5,

linestyles=['--', '-', '--'])

# plot support vectors

if plot_support:

ax.scatter(model.support_vectors_[:, 0],

model.support_vectors_[:, 1],

s=100, facecolors='none', edgecolors='black',linestyle='--');

if list_vectors:

print(model.support_vectors_)

ax.set_xlim(xlim)

ax.set_ylim(ylim)plt.scatter(X[:, 0], X[:, 1], c=y, s=50, cmap='autumn')

plot_svc_decision_function(model)

This is the dividing line that maximizes the margin between the two sets of points.

Notice that a few of the training points just touch the margin: they are indicated by the black circles in this figure.

These points are the pivotal elements of this fit, and are known as the support vectors, and give the algorithm its name.

In Scikit-Learn, the identity of these points are stored in the support_vectors_ attribute of the classifier.

model.support_vectors_array([[-0.40500616, 6.91150953],

[ 2.65952903, 4.72035783],

[ 2.07017704, 4.00397825]])A key to this classifier’s success is that for the fit, only the position of the support vectors matter; any points further from the margin which are on the correct side do not modify the fit!

Technically, this is because these points do not contribute to the loss function used to fit the model, so their position and number do not matter so long as they do not cross the margin.

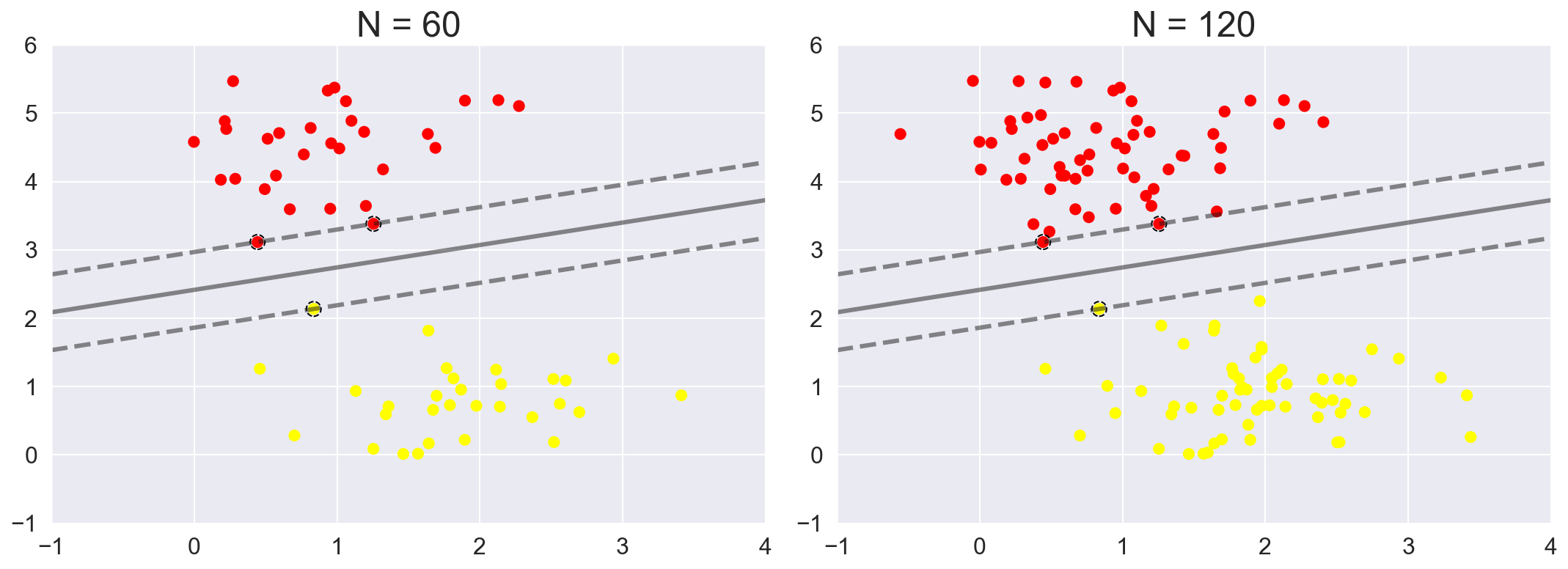

We can see this, for example, if we plot the model learned from the first 60 points and first 120 points of this dataset:

def plot_svm(N=10, ax=None):

X, y = make_blobs(n_samples=200, centers=2,

random_state=0, cluster_std=0.60)

X = X[:N]

y = y[:N]

model = SVC(kernel='linear', C=1E10)

model.fit(X, y)

ax = ax or plt.gca()

ax.scatter(X[:, 0], X[:, 1], c=y, s=50, cmap='autumn')

ax.set_xlim(-1, 4)

ax.set_ylim(-1, 6)

plot_svc_decision_function(model, ax)

fig, ax = plt.subplots(1, 2, figsize=(16, 6))

fig.subplots_adjust(left=0.0625, right=0.95, wspace=0.1)

for axi, N in zip(ax, [60, 120]):

plot_svm(N, axi)

axi.set_title('N = {0}'.format(N))

In spite of increasing the training points, once the margins and the corresponding support vectors are identified the model does not change. This is one of the strengths of this algorithm

from ipywidgets import interact, fixed

interact(plot_svm, N=[10, 50, 100, 150, 200], ax=fixed(None));Beyond linear kernels: Kernel SVM

Kernels are helpful in projecting data into higher dimensional feature space. This can be useful in simplest case to fit non-linear data using linear regression models. Similarly in the case of SVM: Projecting the data into higher dimensions through either polynomial or gaussian kernels we can fit non-linear relationships to a linear classifier

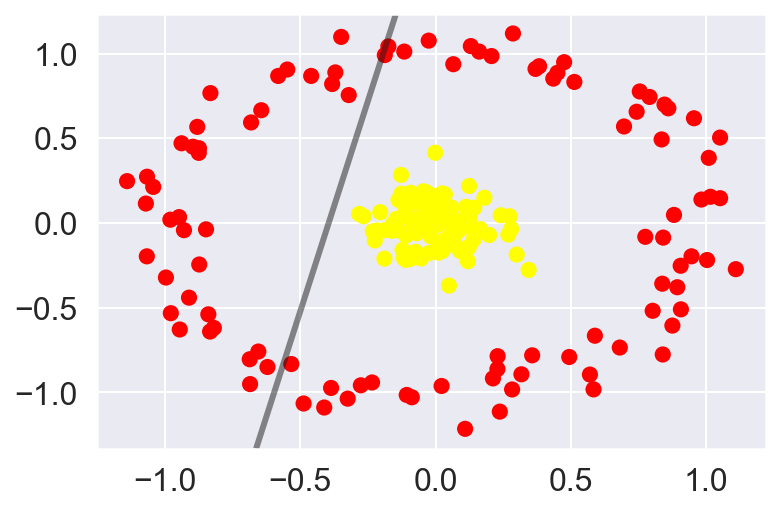

Let’s look at a data-set which is not linearly separated:

from sklearn.datasets import make_circles

X, y = make_circles(200, factor=0.1, noise=0.1)

clf = SVC(kernel='linear').fit(X,y)

plt.scatter(X[:,0], X[:,1], c=y, s=50, cmap='autumn')

plot_svc_decision_function(clf, plot_support=False)

There is not straight forward way to separate this data however we can project the data into higher dimensions based on its properties in the current dimensional space and get more information about its spread. One way of doing so is computing a radial basis function centered at the middle lump

r = np.exp(-(np.sum((X)**2,axis=1)))

from mpl_toolkits import mplot3d

def plot_3D(elev=30, azim=30, X=X, y=y):

ax = plt.subplot(projection='3d')

ax.scatter3D(X[:, 0], X[:, 1], r, c=y, s=50, cmap='autumn')

ax.view_init(elev=elev, azim=azim)

ax.set_xlabel('x')

ax.set_ylabel('y')

ax.set_zlabel('r')

interact(plot_3D, elev=[-90, -45, -30, 30, 45, 60, 90], azip=(-180, 180),

X=fixed(X), y=fixed(y));Projecting the data in an additonal dimensions we can see can having a plane at r=0.7 could give us good separation.

Here we had to choose and carefully tune our projection: if we had not centered our radial basis function in the right location, we would not have seen such clean, linearly separable results.

In general, the need to make such a choice is a problem: we would like to somehow automatically find the best basis functions to use.

One strategy to this end is to compute a basis function centered at every point in the dataset, and let the SVM algorithm sift through the results. This type of basis function transformation is known as a kernel transformation, as it is based on a similarity relationship (or kernel) between each pair of points.

A potential problem with this strategy—projecting N points into N dimensions—is that it might become very computationally intensive as N grows large. However, because of a neat little procedure known as the kernel trick, a fit on kernel-transformed data can be done implicitly—that is, without ever building the full N-dimensional representation of the kernel projection! This kernel trick is built into the SVM, and is one of the reasons the method is so powerful.

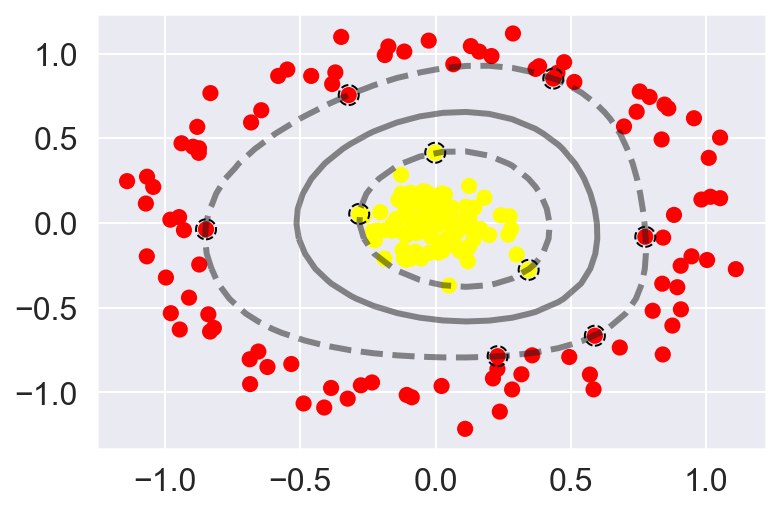

In Scikit-Learn, we can apply kernelized SVM simply by changing our linear kernel to an RBF (radial basis function) kernel, using the kernel model hyperparameter:

clf = SVC(kernel='rbf', C=1E6)

clf.fit(X, y)SVC(C=1000000.0)plt.scatter(X[:, 0], X[:, 1], c=y, s=50, cmap='autumn')

plot_svc_decision_function(clf)

plt.scatter(clf.support_vectors_[:, 0], clf.support_vectors_[:, 1],

s=300, lw=1, facecolors='none');

Softer margins

X, y = make_blobs(n_samples=100, centers=2,

random_state=0, cluster_std=1.2)

plt.scatter(X[:, 0], X[:, 1], c=y, s=50, cmap='autumn');

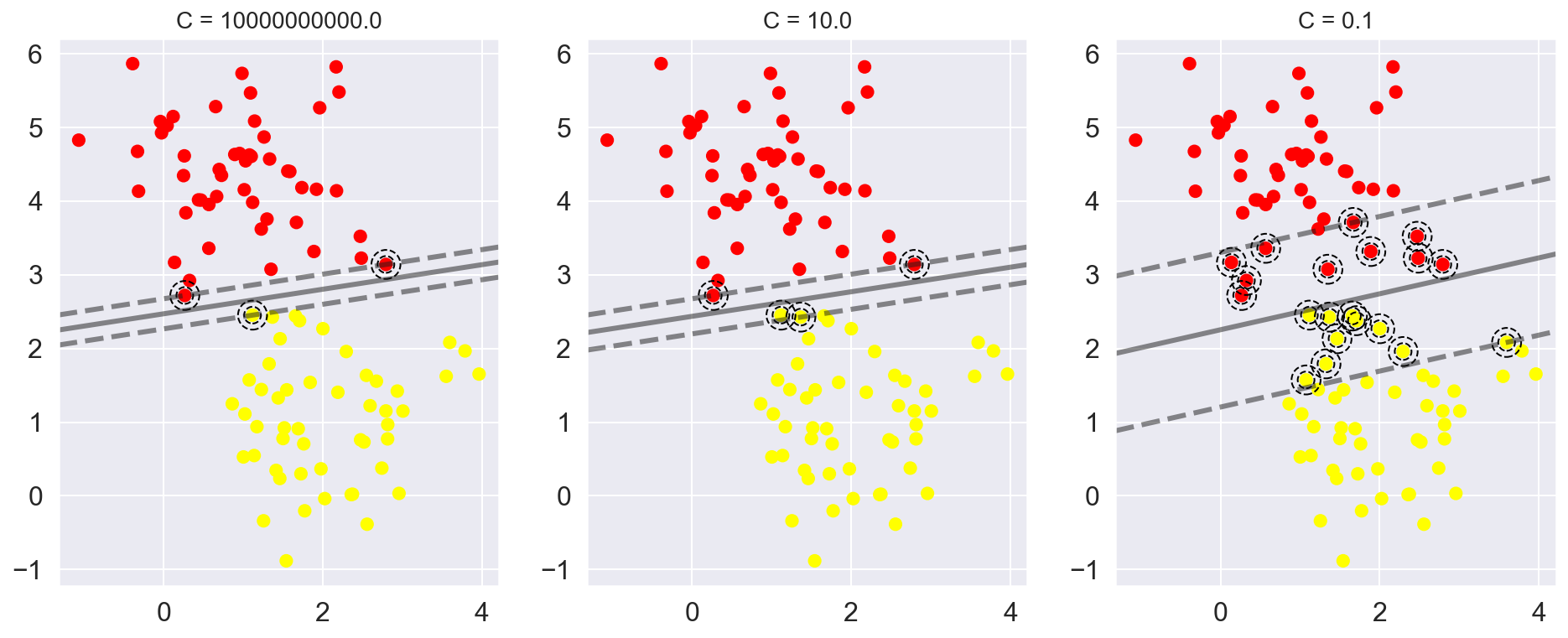

To handle this case, the SVM implementation has a bit of a fudge-factor which “softens” the margin: that is, it allows some of the points to creep into the margin if that allows a better fit. The hardness of the margin is controlled by a tuning parameter, most often known as C.

For very large C, the margin is hard, and points cannot lie in it. For smaller C, the margin is softer, and can grow to encompass some points.

The plot shown below gives a visual picture of how a changing C parameter affects the final fit, via the softening of the margin:

X, y = make_blobs(n_samples=100, centers=2,

random_state=0, cluster_std=0.8)

fig, ax = plt.subplots(1, 3, figsize=(16, 6))

for axi, C in zip(ax, [1E10, 10.0, 0.1]):

model = SVC(kernel='linear', C=C).fit(X, y)

axi.scatter(X[:, 0], X[:, 1], c=y, s=50, cmap='autumn')

plot_svc_decision_function(model, axi)

axi.scatter(model.support_vectors_[:, 0],

model.support_vectors_[:, 1],

s=300, facecolors='none', edgecolors='black',linestyle='--')

axi.set_title('C = {0:.1f}'.format(C), size=14)

Example: Facial Recognition

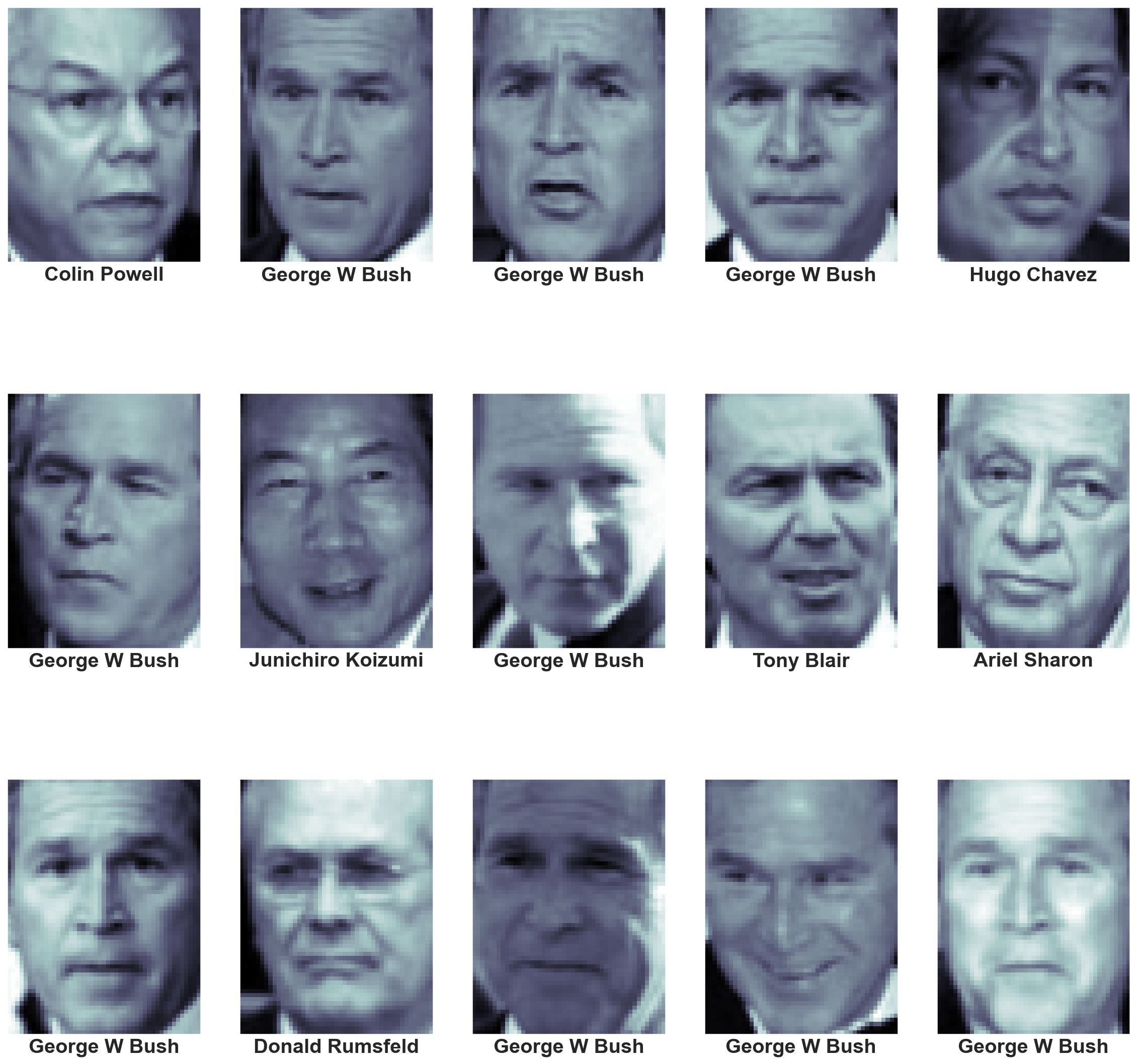

As an example of support vector machines in action, let’s take a look at the facial recognition problem.

We will use the Labeled Faces in the Wild dataset, which consists of several thousand collated photos of various public figures.

A fetcher for the dataset is built into Scikit-Learn:

from sklearn.datasets import fetch_lfw_people

faces = fetch_lfw_people(min_faces_per_person=60)

print(faces.target_names)

print(faces.images.shape)['Ariel Sharon' 'Colin Powell' 'Donald Rumsfeld' 'George W Bush'

'Gerhard Schroeder' 'Hugo Chavez' 'Junichiro Koizumi' 'Tony Blair']

(1348, 62, 47)fig, ax = plt.subplots(3,5,figsize=(20,20))

for i,axi in enumerate(ax.flat):

axi.imshow(faces.images[i],cmap='bone')

axi.set(xticks=[], yticks=[],

xlabel=faces.target_names[faces.target[i]])

Each image contains [62×47] or nearly 3,000 pixels. We could proceed by simply using each pixel value as a feature, but often it is more effective to use some sort of preprocessor to extract more meaningful features.

Here we will use a principal component analysis to extract 150 fundamental components to feed into our support vector machine classifier. We can do this most straightforwardly by packaging the preprocessor and the classifier into a single pipeline:

from sklearn.svm import SVC

from sklearn.decomposition import PCA

from sklearn.pipeline import make_pipeline

pca = PCA(n_components=150, whiten=True, svd_solver='randomized', random_state=42)

svc = SVC(kernel='rbf', class_weight='balanced')

model = make_pipeline(pca,svc)from sklearn.model_selection import train_test_split, GridSearchCV

Xtrain, Xtest, ytrain, ytest = train_test_split(faces.data, faces.target, random_state=42)Finally, we can use a grid search cross-validation to explore combinations of parameters. Here we will adjust C (which controls the margin hardness) and gamma (which controls the size of the radial basis function kernel), and determine the best model:

param_grid = {'svc__C': [0.001, 0.1, 1, 5, 10, 50],

'svc__gamma': [0.0001, 0.0005, 0.001, 0.005]}

grid = GridSearchCV(model, param_grid, cv=5)

%time grid.fit(Xtrain, ytrain)

print(grid.best_params_)CPU times: user 2min 26s, sys: 35.1 s, total: 3min 1s

Wall time: 33 s

{'svc__C': 10, 'svc__gamma': 0.001}model = grid.best_estimator_

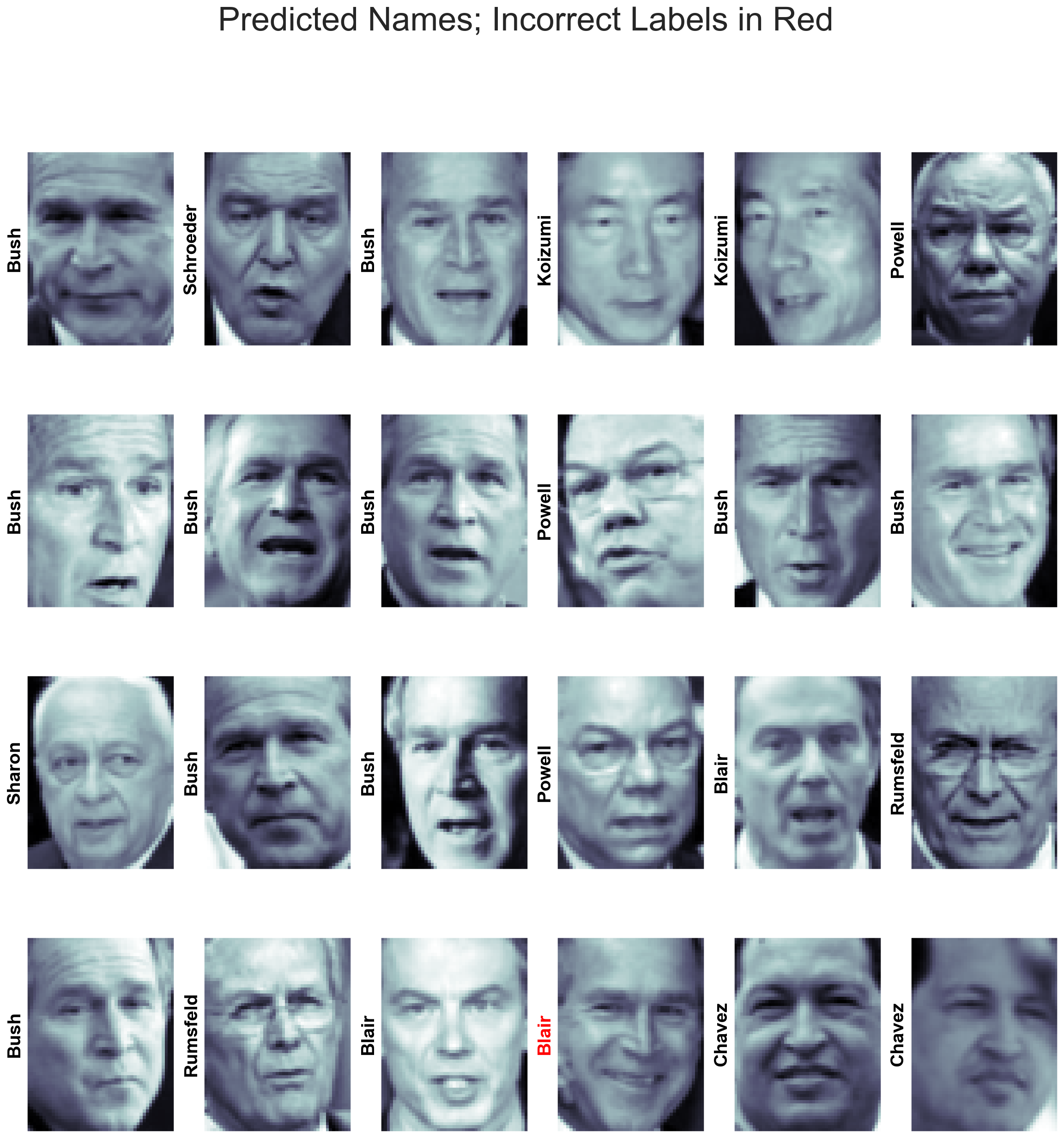

yfit = model.predict(Xtest)fig, ax = plt.subplots(4, 6,figsize=(20,20))

for i, axi in enumerate(ax.flat):

axi.imshow(Xtest[i].reshape(62, 47), cmap='bone')

axi.set(xticks=[], yticks=[])

axi.set_ylabel(faces.target_names[yfit[i]].split()[-1],

color='black' if yfit[i] == ytest[i] else 'red')

fig.suptitle('Predicted Names; Incorrect Labels in Red');

from sklearn.metrics import classification_report

print(classification_report(ytest, yfit,

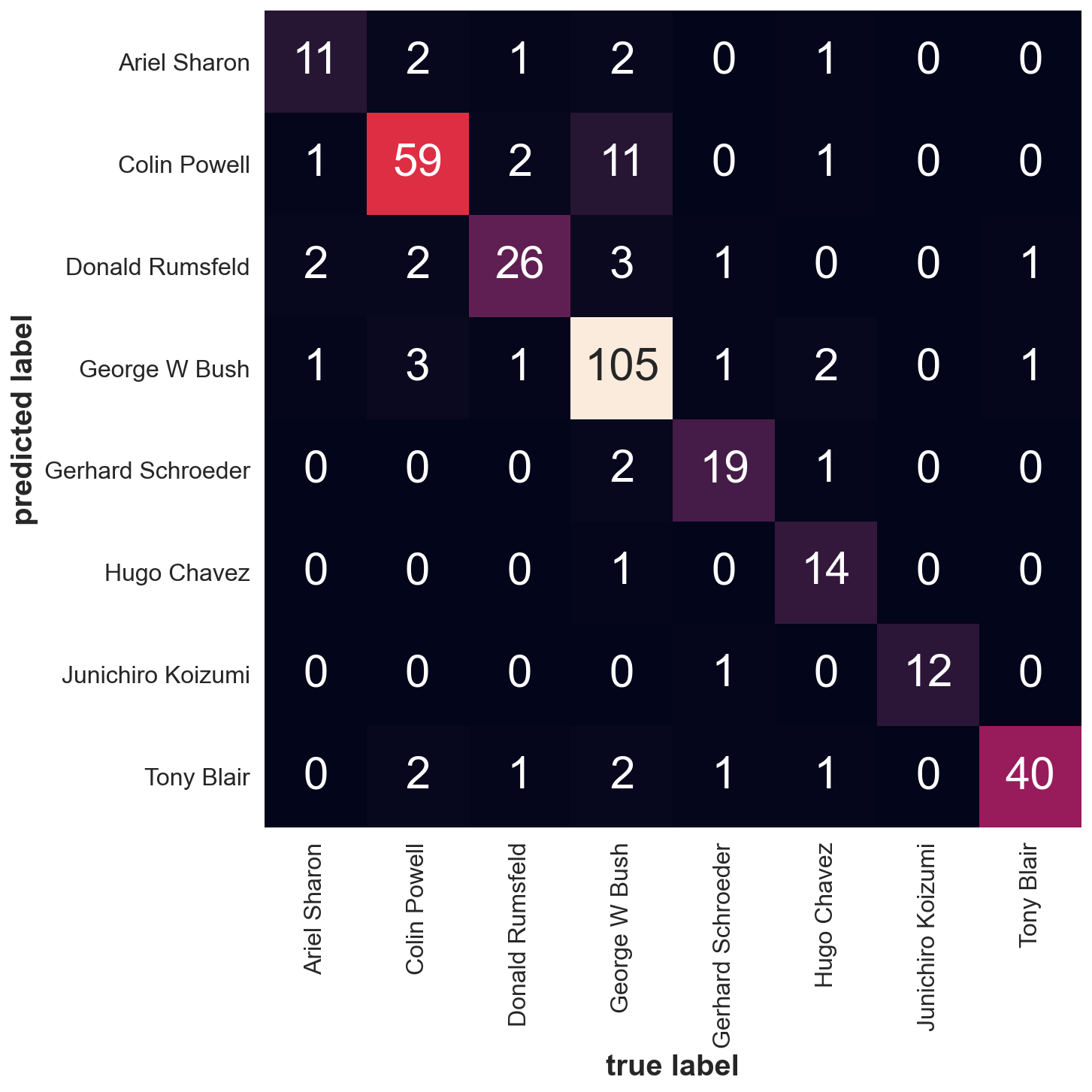

target_names=faces.target_names)) precision recall f1-score support

Ariel Sharon 0.65 0.73 0.69 15

Colin Powell 0.80 0.87 0.83 68

Donald Rumsfeld 0.74 0.84 0.79 31

George W Bush 0.92 0.83 0.88 126

Gerhard Schroeder 0.86 0.83 0.84 23

Hugo Chavez 0.93 0.70 0.80 20

Junichiro Koizumi 0.92 1.00 0.96 12

Tony Blair 0.85 0.95 0.90 42

accuracy 0.85 337

macro avg 0.83 0.84 0.84 337

weighted avg 0.86 0.85 0.85 337

from sklearn.metrics import confusion_matrix

mat = confusion_matrix(ytest, yfit)

fig, ax = plt.subplots(1,1, figsize=(10,10))

sns.heatmap(mat.T, square=True, annot=True, fmt='d', cbar=False,

xticklabels=faces.target_names,

yticklabels=faces.target_names, ax=ax)

plt.xlabel('true label')

plt.ylabel('predicted label');